suppose, like me, you’re a crazy person who likes to make 2D games with MonoGame, instead of a super-popular and full-featured engine like Unity >_>

hello, fellow crazy person!

it’s fun doing stuff yourself, right? it’s slower, and harder, but that’s fine: we’re LEARNING stuff. also, we get full control over things, like the input system (which Unity has its own ideas about that we might find cumbersome for our particular project).

one of the hardest things – for me – has been using PIXEL SHADERS in MonoGame. pixel shaders have their own language, and are pretty low-level, which makes information on them harder to find than, say, how to get input from your gamepad (something EVERYONE wants to do, and which is pretty easy TO do). to top it off, MonoGame has a couple of its own little quirks in how it uses pixel shaders, and its toolset is VERY bad at providing error messages when your pixel shader code is bad! put all this together, and it can be really hard to get started using pixel shaders in MonoGame!

I hope this tutorial can help you out with that!

Assumptions

I’m going to assume that you know C#, and have followed a couple basic MonoGame tutorials. maybe you even know how to load sprites and move them around the screen! (if so, you’re a little ahead of the game, because the first thing I’m going to show you is…)

… Loading & Drawing Sprites!

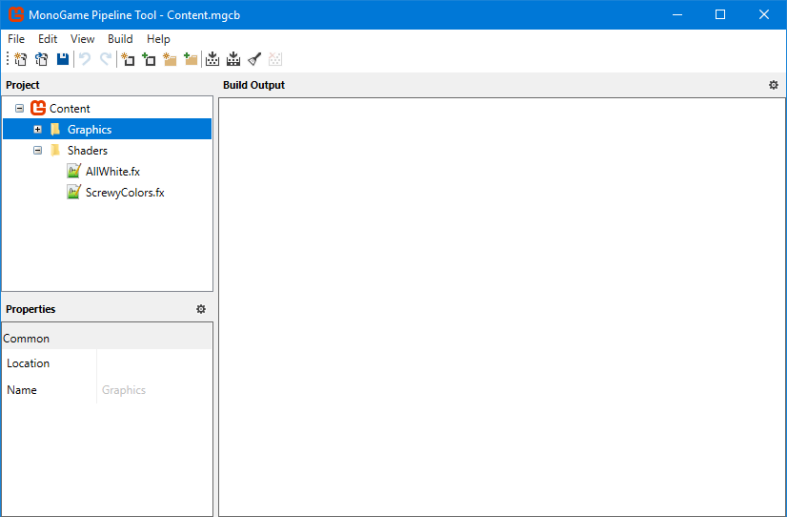

first, you’re going to need at least a sprite, and a pixel shader. you should have a Content.mgcb file, which, when opened, yields something like this:

if you don’t have this file, or it won’t open in the MonoGame Pipeline Tool, you may have created a blank C# project, instead of using a MonoGame template. you CAN create & set up this file manually, but that’s a whole other weird thing. I may post about how to do that another time; for now, you’ll have to google up some help… or just start over again with a new C# project using a MonoGame template!

also: the screenshot above shows off some pixel shaders. don’t worry that you don’t have any yet. we’ll get to that!

ALRIGHT: let’s load up a sprite (assuming you have one called “Graphics/Characters/LittleDude.png”)

Texture2D littleDude = Content.Load<Texture2D>("Graphics/Characters/LittleDude");

remember that MonoGame doesn’t care about your assets’ file extensions. LittleDude here may be called “LittleDude.png” on the disk, but when asking MonoGame to load content files, you leave the file extension off!

to draw this sprite on the screen, you’d write something like…

SpriteBatch.Begin(SpriteSortMode.Deferred, BlendState.AlphaBlend, SamplerState.PointClamp);

SpriteBatch.Draw(littleDude, new Vector2(150, 50), new Rectangle(0, 0, 20, 20), Color.White);

SpriteBatch.End();

SpriteBatch.Begin(...) BEGINS the drawing process.

- SpriteSortMode.Deferred means that the sprites will not be drawn until SpriteBatch.End() is called. this improves performance.

- BlendState.AlphaBlend means that the alpha channel of the sprite should be respected, and partially-transparent pixels will be blended with whatever is underneath.

- SamplerState.PointClamp means that if we stretch an image, we want a pixelated effect, rather than any kind of smoothing/blurring. I’m a sucker for the pixel aesthetic, but you might want something different for your game.

SpriteBatch.Draw(...) draws a sprite!

- littleDude is the sprite to draw; the one we loaded earlier!

- new Vector2(150, 50) represents where on the screen to draw the image.

- new Rectangle(0, 0, 20, 20) represents a rectangle of pixels from within the littleDude image to draw. for example, LittleDude.png might be an 80×20 image, where each 20×20 square contains the dude facing in a different direction.

- Color.White represents the TINT to apply to the sprite. using full white leaves the image unaltered.

SpriteBatch.End tells MonoGame that we’re done with this batch, and it should draw everything out to the screen.
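if you go the sprite-sheet route, picking the right 20×20 square is just a little arithmetic. here’s a minimal sketch of that, under some assumptions: FrameRect is a name I made up (it’s not part of MonoGame), frames are assumed to be laid out left-to-right, top-to-bottom, and it returns plain ints so it runs without MonoGame. in a real game you’d feed the four values into new Rectangle(...).

```csharp
using System;

// hypothetical helper (not a MonoGame API): the source rectangle for frame `index`
// in a sheet of fixed-size frames laid out left-to-right, top-to-bottom
static (int X, int Y, int Width, int Height) FrameRect(int index, int frameWidth, int frameHeight, int sheetWidth)
{
    int framesPerRow = sheetWidth / frameWidth;
    int column = index % framesPerRow;
    int row = index / framesPerRow;
    return (column * frameWidth, row * frameHeight, frameWidth, frameHeight);
}

// the 80x20 LittleDude sheet from above: four 20x20 frames in a single row
for (int i = 0; i < 4; i++)
{
    var r = FrameRect(i, 20, 20, 80);
    Console.WriteLine($"frame {i}: new Rectangle({r.X}, {r.Y}, {r.Width}, {r.Height})");
}
```

frame 0 comes out as the same Rectangle(0, 0, 20, 20) used in the Draw call above; the other three frames just slide 20 pixels to the right each time.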

Why Even Shade Pixels?

pixel shaders make it easy to do some cool things. here’s a few examples:

- let players customize the skin, hair, & clothes colors of their character’s sprite (with minimal code)

- flash the character’s sprite all white, to indicate receiving damage, invincibility, or some other effect, WITHOUT hand-creating all-white versions of your sprites.

- you could make the whole screen wobble & wave, get super-pixely, or invert a bunch of colors, or some other visual effect like that. (perhaps as part of some status effect?)

- similarly, you could render everything in grayscale, perhaps to represent a scene from a memory.

some of these things you might imagine being able to code up yourself, but pixel shaders often let you do these things with WAY less code. also, pixel shaders are processed by the GPU, in parallel with whatever the CPU is doing. ALSO also, GPUs are CUSTOM-BUILT to churn through pixel shaders like they’re nothing. (more on this later!)

Writing a Pixel Shader

you’re probably pretty comfy making littleDude.png, even if it’s just in MSPaint, but how do we make a pixel shader?

let’s start with a simple one: a shader that turns all the pixels in your sprite white (while still respecting alpha transparency!) this is a great way to indicate to the player that a character has received damage, and/or to show that a character is invulnerable. it’s also a good introduction to pixel shaders, so let’s get to it!

sampler inputTexture;

float4 MainPS(float2 textureCoordinates: TEXCOORD0): COLOR0

{

float4 color = tex2D(inputTexture, textureCoordinates);

color.rgb = 1.0f;

return color;

}

technique Technique1

{

pass Pass1

{

PixelShader = compile ps_3_0 MainPS();

AlphaBlendEnable = TRUE;

DestBlend = INVSRCALPHA;

SrcBlend = SRCALPHA;

}

};

look weird? don’t worry, we’ll go over it together, BUT: I do want to throw a little disclaimer out there: I know some things about pixel shaders, but I also DON’T know some things! this particular shader has several lines that I cannot fully explain to you; I found them online, and I know they work, but I’m NOT aware of the full range of options, etc. I’ll definitely call these out as we get to them; feel free to google up more info on your own!

good?

good!

let’s take it from the top.

- sampler inputTexture; at the top of any pixel shader, you can define all kinds of what look like global variables. these are actually PARAMETERS for the entire pixel shader. MonoGame provides a way for you to pass data into a pixel shader. SpriteBatch ALSO hands the shader the texture being drawn, without you needing to ask it to: as far as I can tell, it binds that texture to texture slot 0, which is where a shader’s first (here: only) sampler ends up. the name “inputTexture” is not special (MonoGame’s own effect template uses a different name); what matters is that it’s a sampler. this parameter, as you may have guessed, is a reference to the image which the shader is being applied to.

- float4 MainPS(...): COLOR0 is the definition of our pixel shader function. the name “MainPS” is not special; it can be whatever you want. the return type, however, must be of type “float4”. a “float4” is an exciting pixel shader data type. think of it as a struct or class with four member variables which are all floats. so far that sounds normal, however the way pixel shaders allow you to access and manipulate the member variables gets weird, as we’ll soon see! anyway: your pixel shader is expected to return a color – the new color for your pixel – and a color is simply four floats: r, g, b, and a. finally: “COLOR0”. this is what HLSL calls a “semantic”: a tag that tells the GPU what a value is for. on the return value, COLOR0 means “this is the output color”. can you leave it off entirely? I haven’t tried. feel free to experiment 😛

- float2 textureCoordinates: TEXCOORD0 – the parameter for MainPS – is a “float2” representing where in the image we’re pulling pixel data from. (TEXCOORD0 is another semantic; it marks this value as the first set of texture coordinates.) this might start to give you an idea of how this method is going to be used, but I’ll just tell you: MainPS is going to be called for every pixel in your image. it’s given the coordinate of the pixel, and expected to return the color you’d like to use for that pixel. a pixel shader COULD simply read the color of the pixel at the given coordinate, and return it, but that wouldn’t be a very interesting pixel shader; we’ll be doing more-interesting things.

- float4 color = tex2D(inputTexture, textureCoordinates); alright: so here we’re grabbing the color of the image at the given coordinate. “tex2D” is a pixel shader built-in method that accepts an image, and a coordinate, and returns the color of the pixel at that location. we’re storing the result in a new “float4” called “color”, because we’d like to do something before returning it.

- color.rgb = 1.0f; okay, wtf is up with this syntax? you’re probably thinking “what? there’s a variable called ‘rgb’, and I’m assigning 1.0 to it. what’s the big deal?” the big deal is that this is not what’s happening. a “float4” has four properties, and they can be referred to individually as r, g, b, and a, OR as x, y, z, and w. for example, “color.y = 0.5f;” is valid code, and would set the second value (which happens to be green) to 0.5. you can also combine any of the properties you want, for example “color.ra = color.g” would set the red and alpha components to whatever the green component is. weird! it gets weirder, too, but we’ll get there later. for the purposes of this shader, we’re setting r, g, and b all to 1.0 (full white), and leaving alpha alone! (note: this syntax hints at the GPU’s power of parallelization that makes it so fast. GPUs are designed to operate on lists of complex structures all at once by using their HUGE internal bandwidth.)

- return color; we’ve altered the underlying color – it’s now full white – so we return it! done!

well, except we’re not QUITE done. that’s the logic of the pixel shader, but there’s also some setup we have to do. here’s what that looks like, again, for easy reference:

technique Technique1

{

pass Pass1

{

PixelShader = compile ps_3_0 MainPS();

AlphaBlendEnable = TRUE;

DestBlend = INVSRCALPHA;

SrcBlend = SRCALPHA;

}

};

we have to give the GPU a little bit of metadata about how to use our MainPS function. this is where my personal knowledge really starts to break down, but I’ll explain it as best I can.

- technique Technique1 ... pass Pass1 so: I do know that the name of the technique does not matter, nor does the name of the pass inside. and I know you can have multiple passes in a technique (and I know they can be individually referred to in C#/MonoGame). but the full, formal definition of a “technique” and a “pass” I am NOT aware of. for everything I’ve encountered so far, however, just copy-pasting this basic technique/pass block setup has worked fine.

- PixelShader = compile ps_3_0 MainPS(); defines which function to actually call for this pixel shader. in this case, MainPS. again: we could have called it anything (“AllWhite” might have been a better name, for example…), so long as the names match. the compile ps_3_0 part tells the MonoGame pipeline how to compile this pixel shader. specifically, “ps_3_0” refers to pixel shader version 3.0. you may have noticed video cards bragging about supporting such-and-such version of pixel shader, or games listing some pixel shader version among their requirements. congratulations! your game now requires pixel shader 3.0! I am not aware of all the differences between the various pixel shader versions, but I have been unable to compile a MonoGame project using ps_4_0, and I’ve seen very few examples online of ps_2_0 code, so I’m just sticking with ps_3_0. (for what it’s worth, MonoGame’s own effect template picks ps_3_0 for OpenGL projects and ps_4_0_level_9_1 for DirectX ones, so which profiles compile likely depends on your project’s platform.)

- AlphaBlendEnable = TRUE; DestBlend = INVSRCALPHA; SrcBlend = SRCALPHA; I’m going to cover all of these at once by saying “I don’t know these in depth.” from the names and values, we can infer that they enable alpha blending: this particular pair is the classic blend formula, result = source * sourceAlpha + destination * (1 - sourceAlpha), with “INVSRCALPHA” being the “1 - sourceAlpha” part. in my experience, without these lines, a pixel shader will not pull in the alpha value for any of the pixels when using the tex2D function, causing all transparent areas to become solid after passing through the shader. but what other properties exist? are there other useful values? do we really need all three? unfortunately, I have no idea 😛

hopefully this pixel shader is making a bit more sense to you. feel free to scroll back up and take another look at the code. in fact: feel free to copy this shader wholesale into your own game.

and if you’re not turned off by my lack of knowledge on all the details, let’s move on to actually APPLYING this pixel shader to a sprite!

Loading & Applying a Pixel Shader

ALRIGHT: let’s load that pixel shader up!

this is done very similarly to how you load sprites, or any other content, in MonoGame: add it to your Content.mgcb file, then load it in code with Content.Load:

Effect allWhite = Content.Load<Effect>("Shaders/AllWhite");

done!

applying shaders is a little weird, however. you might expect to do it while drawing an individual sprite, but actually, it’s done as part of a SpriteBatch.Begin call:

SpriteBatch.Begin(SpriteSortMode.Deferred, BlendState.AlphaBlend, effect: allWhite);

SpriteBatch.Draw(littleDude, new Vector2(150, 50), new Rectangle(0, 0, 20, 20), Color.White);

SpriteBatch.End();

P.S. if the effect: allWhite syntax looks strange to you… yeah, it’s a little strange 😛 it’s an uncommon – but useful! – bit of C# syntax called “named arguments” that lets you skip tons of optional parameters, and pass in values only for the ones you actually care about. here’s a contrived example that illustrates this:

void CrazyMethod(int a, int b = 2, int c = 0, float? d = null, float e = 5);

...

CrazyMethod(10, d: 5);

CrazyMethod has a lot of optional parameters, and we only wanted to set a value for d. thanks to this helpful C# syntactical goodness, we’re able to easily do this!
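if you’d like to see exactly which values each parameter ends up with, here’s a small runnable variation. it’s just an illustration: this version of CrazyMethod returns a string describing its arguments (the contrived one above returns nothing), so we can print it.

```csharp
using System;

// same parameter list as the contrived example above, but returning a description
// of what each parameter received
static string CrazyMethod(int a, int b = 2, int c = 0, float? d = null, float e = 5)
{
    string dText = d.HasValue ? d.Value.ToString() : "null";
    return $"a={a} b={b} c={c} d={dText} e={e}";
}

// only `a` (positional) and `d` (named) are supplied; b, c, and e keep their defaults
Console.WriteLine(CrazyMethod(10, d: 5));
// prints: a=10 b=2 c=0 d=5 e=5
```

SpriteBatch.Begin works the same way: effect: allWhite sets just the effect parameter, and every parameter we skipped keeps its default.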

back to pixel shaders:

because we have to define the effect as part of SpriteBatch.Begin, and because there is some overhead in starting and ending batches, we would LIKE to batch up as much sprite-drawing as possible! you might think “oh, I’ll write a helper method to draw sprites, which takes an Effect as a parameter, and wraps the Draw call in a SpriteBatch.Begin and SpriteBatch.End”, but you ABSOLUTELY DO NOT WANT TO DO THIS. imagine drawing a level out of 32×32 tiles… if your game runs at 1080p, you might have about 2000 tiles on screen at a given time (1920 / 32 = 60 across, 1080 / 32 ≈ 34 down), and that’s just one layer of tiles! doing a SpriteBatch.Begin and SpriteBatch.End for each and every draw will slow things down A LOT.

conceptually, however, we’re going to be THINKING about drawing sprites one at a time, which means we’ll often want to write code this way, too. for example: every player and enemy on the screen MIGHT blink white to indicate damage, but you won’t know until you get to that particular character. also, you probably actually really care about the order your sprites are drawn in, for example a reasonable draw order for your game might be:

- background terrain

- enemies

- bullets

- players

- foreground terrain

- UI elements

maybe a player is blinking white (which also causes a bit of UI related to the player – their health bar – to also blink), and so is an enemy. to reduce SpriteBatch Begin/End calls, it’d in some senses be optimal to group up all three of these, but not only would you need to add a lot of code just to accomplish this grouping, you obviously do not want to sacrifice your draw order just to batch things up (placing the player in front of the foreground terrain, or the UI behind the foreground terrain, would be madness).

so we DON’T want to do tons of SpriteBatch Begin/End blocks, but we ALSO can’t group everything up all the time, AND we want to be able to just kinda’ draw sprites one at a time without thinking too hard about it… how do we accomplish this?

not to worry: we can write some code that helps us draw sprites in a conceptually-one-at-a-time way, without worrying about SpriteBatch Begin/End calls, AND with a touch of extra smarts to reduce SpriteBatch Begin/End calls. it won’t be 100% optimal, but unless your game goes absolutely HAM on the pixel shaders, a handful of extraneous SpriteBatch Begin/End blocks isn’t going to murder you (a handful is way less than one Begin/End pair for every tile on screen!)

here’s a simple implementation. (we’ll expand on it a little later, when we get to full-screen shaders, but don’t worry about it 😛 we’ll get there!)

Effect? currentEffect = null;

void StartDrawing()

{

currentEffect = null;

SpriteBatch.Begin(SpriteSortMode.Deferred, BlendState.AlphaBlend, effect: currentEffect);

}

void DrawSprite(Texture2D sprite, int x, int y, int spriteX, int spriteY, int spriteWidth, int spriteHeight, Effect? effect = null)

{

if(currentEffect != effect)

{

currentEffect = effect;

SpriteBatch.End();

SpriteBatch.Begin(SpriteSortMode.Deferred, BlendState.AlphaBlend, effect: currentEffect);

}

SpriteBatch.Draw(sprite, new Vector2(x, y), new Rectangle(spriteX, spriteY, spriteWidth, spriteHeight), Color.White);

}

void FinishDrawing()

{

SpriteBatch.End();

}

so what’s going on here? how do we use this?

the idea is to End and Begin a new SpriteBatch only when you need to switch shaders. for example, when you’re drawing all the background terrain tiles in a level, you may not change pixel shaders at all. if we use the above code (I’ll show an example of that shortly) in such a situation, it will correctly never End and Begin a second SpriteBatch. at the same time, when we switch to drawing enemies and things, it might get a little more mixed, but the above code will handle this as well, ending and starting new sprite batches as pixel shaders change. you may end up with a few extraneous batches in the end, but most games are going to have way fewer enemies on-screen than tiles, anyway.

an example use of the above helper methods would look something like this (the following code is incomplete):

void DrawScene()

{

StartDrawing();

for(int y = 0; y < levelHeight; y++)

{

for(int x = 0; x < levelWidth; x++)

{

DrawSprite(levelTileSpriteSheet, x * 32, y * 32, XXXX, YYYY, 32, 32);

// ^ figure out the sprite sheet offsets XXXX & YYYY based on your level's tilemap

}

}

foreach(var enemy in enemies)

{

DrawSprite(...); // draw each enemy on the screen, according to whatever logic they need

}

foreach(var bullet in bullets)

{

DrawSprite(...); // draw each bullet on the screen, according to whatever logic THEY need

}

// draw the player, accounting for whether or not they're invulnerable:

DrawSprite(littleDude, littleDudeX, littleDudeY, 0, 0, 20, 20, littleDudeIsInvulnerable ? allWhite : null);

// maybe draw some UI stuff here...

FinishDrawing();

}

the DrawSprite helper method does the hard work of tracking what the currently-applied “Effect” (pixel shader) is, and only ending and beginning a new sprite batch when that changes. this frees us from worrying about these details, and we can simply draw sprites with whatever shader we want, as we want.
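to get a feel for how few batches this actually produces, here’s a rough sketch that counts them without touching MonoGame at all. the assumptions are loud ones: CountBatches is a made-up name, plain strings stand in for Effect objects (null meaning “no shader”), and the 2000 tiles are just an illustrative screenful.

```csharp
#nullable enable
using System;

// counts how many SpriteBatch.Begin calls the DrawSprite helper would make for a
// sequence of per-sprite effects. strings stand in for Effect objects here.
static int CountBatches(string?[] effectPerSprite)
{
    int batches = 1;                 // StartDrawing() always begins one batch
    string? currentEffect = null;
    foreach (var effect in effectPerSprite)
    {
        if (effect != currentEffect)
        {
            currentEffect = effect;
            batches++;               // End() + Begin() with the new effect
        }
    }
    return batches;
}

var effects = new string?[2000 + 3]; // 2000 tiles with no effect...
effects[2000] = "allWhite";          // ...an enemy blinking white...
// effects[2001] is a bullet with no effect, so it stays null
effects[2002] = "allWhite";          // ...and the player blinking white
Console.WriteLine(CountBatches(effects));
// prints: 4
```

four batches for 2003 sprites: one for all the tiles, then one each time the effect flips between “none” and “allWhite”. not perfectly optimal (the two white sprites could in theory share a batch), but draw order is preserved.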

Pixel Shader Parameters (and a Full-Screen Shader!)

a while ago, I mentioned that pixel shaders can take PARAMETERS. let’s see how to do that with a shader that over-pixelates your image to varying degrees.

by “over-pixelate”, I mean that every square of four (or 9, or 16…) pixels will take on the color of a single pixel within that square. this could be used as part of a pixelating transition (ex: start a scene by applying crazy-high pixelation, and then reduce the pixelation until you reveal the true image), or perhaps as part of a damage visual effect (briefly pixelate the screen when the player takes damage), etc.

here’s the shader code:

sampler inputTexture;

int pixelation;

float4 MainPS(float2 originalUV: TEXCOORD0): COLOR0

{

// my game runs at 960x540; change to reflect the resolution YOUR game runs at

originalUV *= float2(960, 540);

float2 newUV;

newUV.x = round(originalUV.x / pixelation) * pixelation;

newUV.y = round(originalUV.y / pixelation) * pixelation;

// again: change this to match your screen's resolution

newUV /= float2(960, 540);

return tex2D(inputTexture, newUV);

}

technique Technique1

{

pass Pass1

{

PixelShader = compile ps_3_0 MainPS();

}

};

there’s some interesting things going on here. let’s break it down:

- int pixelation; represents a parameter for the shader. we haven’t talked about how to use it yet, but don’t worry: we’ll get there soon!

- float4 MainPS(float2 originalUV: TEXCOORD0): COLOR0 is our pixel shader function declaration again. here, I’ve called the texture coordinate “originalUV”, instead of “textureCoordinates”. the name is not important; honestly, the only reason I’m using “originalUV” here, is because this pixel shader is a modification of another I found online, and in that original shader, the parameter was called “originalUV”, and I don’t really care what that parameter is called. (as long as it’s not some garbage abbreviation like “origTexCoor”; P.S. graphics people really like to call texture coordinates U and V. the usual explanation is that X, Y, and Z were already taken by positions in 3D space. you may have seen things like “UV mapping” in 3D modeling programs, for example. I believe Unity uses UV as well.)

- originalUV *= float2(960, 540) just like you can assign to multiple properties at once (remember color.rgb = 1.0?), you can perform all kinds of other math on multiple properties, as well! this line multiplies the first part of originalUV (x) by the first part of float2(960, 540), which is 960, and the second part (y) by the second part (540). why do we do this step? something I haven’t mentioned before is that texture coordinates always range from 0.0-1.0, no matter the dimensions of the texture. if your texture is 1250×10, then texture coordinates 0.5,0.5 refer to pixel 625,5 (or maybe 624,4 – whatever). but we’re dealing with pixelation here, so we really want to think of our texture in terms of its pixels. multiplying this 0.0-1.0 coordinate value by the dimensions of the source image – which this shader assumes is 960×540 (the size of my game’s screen) – turns the value into a pixel value that we can work with.

- then we do the pixelation magic! divide by the pixelation factor, round, and multiply by the pixelation factor. if you haven’t seen math like this before, it’s easier to think about it one coordinate at a time, for example, suppose the pixelation is “3”, meaning every 3 pixels in a line should be the same color. now think of what happens if we take pixels 11, 12, and 13 and run them through this math. first, we divide by 3, and get 3.67, 4, and 4.33. rounding all of those values yields 4. multiply by 3 again, and we’re at pixel 12, for all three pixels. when we use this new value to do a pixel lookup, it means that pixels 11, 12, and 13 will all use the color from pixel 12! similarly, pixels 14, 15, and 16 will all become 15. now, if we do the same on a second axis, we get 3×3 squares where all pixels of that square are the color of the center pixel from the original image!

- divide by float2(960, 540) to scale back to the 0.0-1.0 range that pixel shaders expect

- use tex2D to return the color of the texture at the divided, rounded, and multiplied location, which finally achieves the pixelation effect!
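if you want to convince yourself of the divide-round-multiply trick, here’s the same math for a single axis as a tiny C# sketch you can run on the CPU. SnapToBlock is a name I made up for illustration. one caveat: for values that land exactly on .5, C#’s Math.Round and the shader’s round may not agree, so treat this as a way to check the idea rather than a pixel-exact copy of the shader.

```csharp
using System;

// CPU-side version of the shader's snapping math, for one axis:
// divide by the pixelation factor, round, multiply back
static int SnapToBlock(int pixel, int pixelation)
{
    return (int)Math.Round((double)pixel / pixelation) * pixelation;
}

for (int pixel = 11; pixel <= 16; pixel++)
{
    Console.WriteLine($"{pixel} -> {SnapToBlock(pixel, 3)}");
}
// prints (one per line): 11 -> 12, 12 -> 12, 13 -> 12, 14 -> 15, 15 -> 15, 16 -> 15
```

pixels 11 through 13 all read from pixel 12, and 14 through 16 all read from pixel 15, which is exactly the “every 3 pixels share a color” behavior described above.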

the “technique” and “pass” stuff you’ve seen before, so I won’t go over it again, however note that THIS time all that alpha stuff has been left out. why? because I’m intending to use this shader on the WHOLE screen. I’m not sure how much extra work it adds to the GPU to ask it to think about alpha blending, but since I know I’m not going to need it, I’m going to spare the GPU the trouble of thinking about it.

so now let’s see how to tell this shader what “pixelation” value you want. this is a shader parameter, and MonoGame makes it pretty easy to use these.

suppose you loaded this shader in this way:

Effect pixelationShader = Content.Load<Effect>("Shaders/Pixelate");

to set a parameter:

pixelationShader.Parameters["pixelation"].SetValue(3);

now apply the shader as before:

DrawSprite(littleDude, littleDudeX, littleDudeY, 0, 0, 20, 20, pixelationShader);

but wait: weren’t we going to pixelate the WHOLE display with this shader? the above code would only pixelate littleGuy!

for full-screen shaders, you’ll need to change how you draw. previously, we did this:

StartDrawing();

// draw a ton of sprites, maybe with effects

FinishDrawing();

we’d LIKE to be able to pass a shader into FinishDrawing, to tell it “hey: finish drawing, but also, do some full-screen pixel shader”. something like:

StartDrawing();

// draw a ton of sprites, maybe with effects

// apply the pixelationShader, using a pixelationFactor variable from code:

if(pixelationFactor > 1)

{

pixelationShader.Parameters["pixelation"].SetValue(pixelationFactor);

FinishDrawing(pixelationShader);

}

else

{

FinishDrawing();

}

however, FinishDrawing doesn’t support an optional shader argument. also, I wasn’t lying earlier when I said that pixel shaders have to be passed in to SpriteBatch.Begin calls. they really do. so how is passing a shader into FinishDrawing supposed to help anything?

the answer is RENDER TARGETS.

Render Targets?!

render targets.

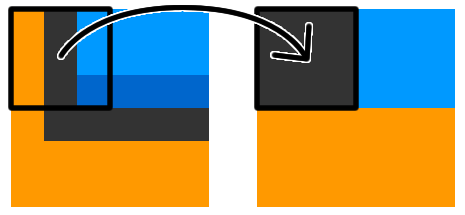

a render target is an object you can ask MonoGame to draw to, instead of drawing to the screen. it’s a special bit of memory typically kept in the GPU to make things as speedy as possible. once you’ve drawn there, you can then draw the entire render target to the screen. this additional draw will give us an opportunity to do a SpriteBatch.Begin, which is where we’ll pass in a shader, causing it to be applied to the entire screen!

you can use render targets to do other cool things, too. for example in a split-screen co-op game, it makes a lot of sense to give each player a render target that’s half the size of the physical screen, draw each player’s view to their individual render target, then draw each render target to a different place on the physical screen.

minimaps are another potential use for render targets.

anyway, here’s an updated copy of the helper methods (StartDrawing, FinishDrawing, etc) from above, this time with a render target, and a FinishDrawing method that accepts a pixel shader.

Effect? currentEffect = null;

RenderTarget2D renderTarget;

// you'll need to call this once, before you start drawing!

void Initialize()

{

// my game happens to run at 960x540; yours may run at a different resolution! change this accordingly:

renderTarget = new RenderTarget2D(GraphicsDevice, 960, 540, false, GraphicsDevice.PresentationParameters.BackBufferFormat, DepthFormat.Depth24);

}

void StartDrawing()

{

currentEffect = null;

// draw to the renderTarget, instead of to the screen:

GraphicsDevice.SetRenderTarget(renderTarget);

SpriteBatch.Begin(SpriteSortMode.Deferred, BlendState.AlphaBlend, effect: currentEffect);

}

void DrawSprite(Texture2D sprite, int x, int y, int spriteX, int spriteY, int spriteWidth, int spriteHeight, Effect? effect = null)

{

if(currentEffect != effect)

{

currentEffect = effect;

SpriteBatch.End();

SpriteBatch.Begin(SpriteSortMode.Deferred, BlendState.AlphaBlend, effect: currentEffect);

}

SpriteBatch.Draw(sprite, new Vector2(x, y), new Rectangle(spriteX, spriteY, spriteWidth, spriteHeight), Color.White);

}

void FinishDrawing(Effect? fullScreenShader = null)

{

SpriteBatch.End();

// no more render target; we'll now draw to the screen!

GraphicsDevice.SetRenderTarget(null);

SpriteBatch.Begin(SpriteSortMode.Deferred, BlendState.AlphaBlend, effect: fullScreenShader);

// again: change 960x540 to match your resolution. consider keeping the resolution values in a couple "const"s somewhere, so you can easily change them later, if you want.

SpriteBatch.Draw(renderTarget, new Vector2(0, 0), new Rectangle(0, 0, 960, 540), Color.White);

SpriteBatch.End();

}

you can see that we now track a new object: a RenderTarget2D. we have to initialize this thing before we can use it; that’s what the new Initialize method does. (make sure to call Initialize – just once – before you start drawing anything!)

this new StartDrawing method now instructs MonoGame to draw everything to the renderTarget, instead of the default location (the screen). FinishDrawing has been updated to then draw renderTarget to the screen, applying the given pixel shader (if any).

the SpriteBatch.Draw call inside FinishDrawing is exactly like the call we make for drawing any other sprite, but we ask it to draw from our renderTarget instead of from our littleDude (or whatever other) sprite. this works because RenderTarget2D is a subclass of Texture2D, so anything that accepts a Texture2D will accept a render target, too.

you can see how this starts to allow you to easily make a split-screen co-op game, too! if you created TWO RenderTarget2D objects whose widths were half the screen (480, in this case), you could put them side-by-side in the FinishDrawing method with something like:

// assume renderTarget is now an array or list of RenderTarget2D objects, each 480x540 in size:

SpriteBatch.Draw(renderTarget[0], new Vector2(0, 0), new Rectangle(0, 0, 480, 540), Color.White);

SpriteBatch.Draw(renderTarget[1], new Vector2(480, 0), new Rectangle(0, 0, 480, 540), Color.White);

Other Shader Possibilities: Character Customization

a while ago I mentioned that pixel shaders could be useful for making customizable character sprites.

here’s the idea:

- draw a grayscale person sprite in your favorite drawing program

- make sure that all the hair pixels are the same shade of gray

- make sure that all the shirt pixels are a single, different shade of gray

- etc, for whatever parts of the sprite you want to be customizable

- write a pixel shader that has some color parameters – ex: “float4 hairColor;” “float4 shirtColor;” etc – and replaces the grays in the character sprite with the colors passed in

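pixel shaders allow “if” statements, so it’s pretty easy to read the value of each pixel in the texture, and replace it with a passed-in color. one catch: tex2D returns color channels in the 0.0-1.0 range, not 0-255, so a gray of 10 in your drawing program shows up as 10/255 in the shader, and it’s safer to compare with a small tolerance than with ==. ex:

float4 color = tex2D(inputTexture, textureCoordinates);

// gray level 10 (out of 255) marks hair; gray level 30 marks shirt

if(abs(color.r - 10.0 / 255.0) < 0.002) color.rgb = hairColor.rgb;

else if(abs(color.r - 30.0 / 255.0) < 0.002) color.rgb = shirtColor.rgb;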

// etc.

return color;

in this way, you can let your players choose any colors they want for hair, clothes, skin, etc, and it all works with a single sprite and a single pixel shader.

I haven’t written this particular shader myself, so I can’t give you more-complete code, but it should be fairly easy to figure out, and examples of this kind of shader can be found online.

One More Pixel Shader For You: Grayscale

this one I HAVE written, so can give you.

possible uses include: grayscale a single sprite (zombie player!) or the entire screen (flashback!)

sampler inputTexture;

float4 MainPS(float2 textureCoordinates: TEXCOORD0): COLOR0

{

float4 color = tex2D(inputTexture, textureCoordinates);

// does this look weird? more on this later:

color.rgb = color.r * 0.2126 + color.g * 0.7152 + color.b * 0.0722;

return color;

}

technique Technique1

{

pass Pass1

{

AlphaBlendEnable = TRUE;

DestBlend = INVSRCALPHA;

SrcBlend = SRCALPHA;

PixelShader = compile ps_3_0 MainPS();

}

};

this is very similar to the AllWhite shader from before; so similar, I’m not going to step through everything it does. HOWEVER: what’s up with that weird grayscale math? 0.2126 and all that…

the intuitive answer to “how do I turn RGB into gray” would be to simply average the red, green, and blue components; ex: (color.r + color.g + color.b) / 3, however, human eyes are not sensitive to all wavelengths of light equally, and monitors were unfortunately NOT designed to scale red, blue, and green in ways that match what human eyes and brains perceive. so we need to do a little extra math to grayscaleify things in a way that matches what human eyes and brains expect.

you can find this “red * 0.2126, etc” formula, and an explanation about its exact values, on various places around the internet. here’s one such place: https://en.wikipedia.org/wiki/Grayscale
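to see how much the weights matter, here’s a quick C# sketch comparing the naive average against the weighted formula for pure green and pure blue. Average and Luma are names I picked for illustration; the weights are the same ones used in the shader above.

```csharp
using System;

// the "intuitive" grayscale: a plain average of the three channels
static float Average(float r, float g, float b) => (r + g + b) / 3f;

// the weighted grayscale used by the shader above
static float Luma(float r, float g, float b) => r * 0.2126f + g * 0.7152f + b * 0.0722f;

// pure green and pure blue get the same gray from the average,
// but very different grays from the weighted version
Console.WriteLine($"green: average={Average(0, 1, 0):F3} weighted={Luma(0, 1, 0):F3}");
Console.WriteLine($"blue:  average={Average(0, 0, 1):F3} weighted={Luma(0, 0, 1):F3}");
```

the plain average turns both colors into the same gray (about 0.333), while the weighted version makes green much brighter (about 0.715) than blue (about 0.072), which is closer to how bright those colors actually look. the three weights add up to 1, so pure white stays pure white.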

And That’s It!

I hope this tutorial has been helpful for you! I had a lot of trouble finding information on how to get pixel shaders working in MonoGame. hopefully this article can be useful to some people, including my future self!

unfortunately, I cannot offer timely support on anything written here, but if you spot any errors, or have any questions, definitely send them my way! I’ll read them (eventually!) and update this article if needed!